Gen AI Application Evaluation with the OpenAI Platform: A Practical Tutorial

Before deploying your application to production, it is critical to run evaluations to confirm that your application prompt produces accurate and reliable output. In this post, we will run automated evaluations on our Gen AI application “ClearComms” using the OpenAI API platform. (What is ClearComms? See here.)

First, create an account on the OpenAI platform and generate an API key.

Test Data

Here is the ClearComms sample data, a set of input/output pairs in a Google Sheet.

Evaluation Steps

Step 1: Enter the system prompt in the “Playground” tab and do a dry run with some test data

Option 1: Use Gen AI to create your prompt

Option 2: If you already have a prompt, use it directly

Step 2: Create a new evaluation and import the test data into the platform

Under the Dashboard tab, click Evaluations in the left side panel to create a new evaluation.

Import the test data into the platform

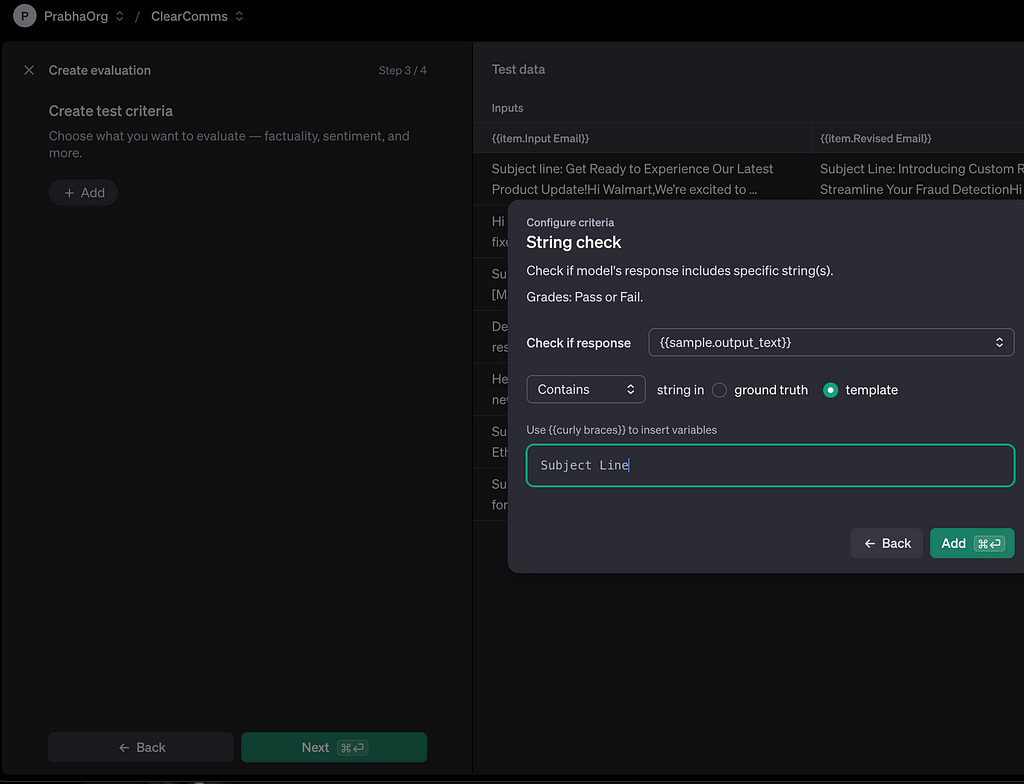
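If the sheet is exported as CSV, a small script can reshape it into JSONL (one JSON object per line) before import. The following is a minimal sketch, not the exact procedure used for ClearComms: the column names `input` and `output` and the sample row are assumptions made for illustration, and you should check which file formats your import dialog accepts.

```python
import csv
import io
import json


def csv_to_jsonl(csv_text: str) -> str:
    """Convert CSV text (header row plus data rows) into JSONL, one object per row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        # Skip rows whose cells are all blank (common in spreadsheet exports).
        if not any(isinstance(value, str) and value.strip() for value in row.values()):
            continue
        lines.append(json.dumps(row, ensure_ascii=False))
    return "\n".join(lines)


# Hypothetical sample row; the real sheet's columns and contents may differ.
sample_csv = (
    "input,output\n"
    '"hey send me report asap","Hi team, could you please share the report? Thanks, Alex"\n'
)
jsonl_text = csv_to_jsonl(sample_csv)
print(jsonl_text)
```

Keeping the conversion in a function makes it easy to rerun whenever the sheet changes, so the imported test data stays in sync with the source.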

Step 3: Create some test criteria

I created string checks, semantic similarity checks, and criteria match checks. Here are the configurations of some of the checks I used for those evaluation criteria:

Semantic Similarity Check

Salutation Check

Signature Check

String Check
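To get a feel for what the text-based checks test, here is a rough local approximation in Python. It is only a sketch: the greeting and sign-off patterns, the function names, and the sample draft are all assumptions made for illustration, not the configurations used on the platform. The semantic similarity check is left out because it needs an embedding or grader model.

```python
import re


def _non_empty_lines(text: str) -> list:
    """Return the stripped, non-empty lines of a message."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def salutation_check(text: str) -> bool:
    """Pass if the first non-empty line opens with a common greeting (assumed list)."""
    lines = _non_empty_lines(text)
    if not lines:
        return False
    pattern = r"^(hi|hello|dear|good (morning|afternoon|evening))\b"
    return re.match(pattern, lines[0], re.IGNORECASE) is not None


def signature_check(text: str) -> bool:
    """Pass if one of the last three non-empty lines is a common sign-off (assumed list)."""
    closing = re.compile(
        r"^(best regards|kind regards|regards|best|thanks|thank you|sincerely)[,.!]?$",
        re.IGNORECASE,
    )
    return any(closing.match(line) for line in _non_empty_lines(text)[-3:])


def string_check(text: str, required: str) -> bool:
    """Pass if the required substring appears, ignoring case."""
    return required.lower() in text.lower()


# Hypothetical model output used only to exercise the checks.
draft = "Hi Priya,\n\nCould you share the Q3 report by Friday?\n\nBest regards,\nAlex"
results = {
    "salutation": salutation_check(draft),
    "signature": signature_check(draft),
    "string": string_check(draft, "Q3 report"),
}
print(results)
```

Running checks like these locally before configuring them on the platform can help you spot criteria that are too strict or too loose.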

Step 4: Run the tests

Click the Run button to run the test criteria against the test data imported from the Google Sheet.
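Conceptually, a run applies every criterion to every row and reports which rows failed. The sketch below mimics that loop locally so the summary and detailed views are easier to interpret. The function name `run_checks`, the two checks, and the sample outputs are hypothetical and deliberately simple; this is not how the platform itself is implemented.

```python
from typing import Callable, Dict, List

Check = Callable[[str], bool]


def run_checks(outputs: List[str], checks: Dict[str, Check]) -> Dict[str, dict]:
    """Apply every check to every output and report pass counts and failing row indexes."""
    summary = {}
    for name, check in checks.items():
        failed = [index for index, text in enumerate(outputs) if not check(text)]
        summary[name] = {
            "passed": len(outputs) - len(failed),
            "total": len(outputs),
            "failed_rows": failed,
        }
    return summary


# Made-up outputs and checks, for illustration only.
outputs = [
    "Hi Sam,\nThe meeting moved to 3 pm.\nThanks,\nAlex",
    "meeting moved to 3 pm",
]
checks = {
    "starts_with_greeting": lambda text: text.lower().startswith(("hi", "hello", "dear")),
    "mentions_time": lambda text: "3 pm" in text,
}
summary = run_checks(outputs, checks)
for name, stats in summary.items():
    print(f"{name}: {stats['passed']}/{stats['total']} passed, failed rows: {stats['failed_rows']}")
```

The per-criterion failing row indexes point you to the specific inputs to inspect when refining the prompt.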

Evaluation Round 1

Evaluation Summary

Evaluation Detailed Results: Some tests failed!

Evaluation Round 2

I refined the prompt a couple of times to address the failed checks, then re-ran the tests.

Evaluation Summary:

Evaluation Detailed Results:

All tests passed!

_________________________________

How to Build the ClearComms App

Coming soon…